The Moment Language Learned to Act: Why Enterprise AI Fails

Executive Summary

What if the moment machines learned to understand language represents the same evolutionary leap as when humans first spoke? This isn't hyperbole - it's the thesis that explains why so many enterprise AI projects are reportedly failing, and what comes next.

Most leaders see AI through an industrial lens: optimization, efficiency, incremental gains. But AI operates on probabilistic principles, while business systems demand deterministic reliability. It's like asking improvisational artists to follow rigid scripts - technically possible, but you lose the magic that makes them valuable.

The breakthrough lies in understanding entropy - the randomness that sparks AI's creativity. Success requires engineering "controlled chaos": systems that channel AI's stochastic nature while maintaining operational reliability. Companies that master this boundary will build cognitive moats; those that don't will optimize toward irrelevance.

Will you architect this transformation or be transformed by it?

The Magic of First Words

Remember the first time you heard a baby speak their first words? As a parent, that moment is pure magic. Some would argue that watching them take their first steps is equally profound, but... I'd disagree. Those first words represent something fundamentally different - the emergence of the very thing that makes us human.

Human language uses arbitrary symbols to represent objects, actions, and ideas. The word "dog" has no inherent connection to the animal - it's a shared symbol we've collectively agreed upon. But here's what's extraordinary: from this finite set of words and rules, we can create infinite new sentences and ideas. This generative creativity is unmatched in the animal kingdom.

...but wait, that's not all; language allows us to talk about things not immediately present - past events, future plans, hypothetical scenarios - all using limited vocabulary and grammar. It's what pushed philosophers from René Descartes onward to recognize that language enables us to infer the thoughts, intentions, and emotions of others.

I'd argue that language is the most powerful construct we have as humans. It's literally what makes us human.

Human intelligence is essentially a combination of our worldview models (governed by language and culture), the data we're exposed to, and the computing power of our gray matter. Everything that followed in human civilization - art, science, technology, society - emerged because we had language. (Well, I’m not going to get away with that argument easily, am I? You might throw in creativity or intuition, maybe even embodied cognition. Fair. But let’s be honest - language is still the bedrock everything else rests on.)

The Pivotal Moment We're Living Through

To me, AI represents that same pivotal moment in human history, but for machines.

Not long ago, I had a very dear CXO friend tell me that AI is a "how" and not a "what" - a way to optimize existing products and technology stacks. "We plug it into our current systems, get some efficiency gains, and call it a win."

Well, I saw his point - it’s pragmatic and makes sense in the short term. At the same time, it reminded me how naturally we all, as humans, frame new ideas through familiar patterns, biases, and systems. That instinct gives comfort and predictability: we know how to tame this thing (or so we think...).

But it kinda felt like using a fusion reactor to power a steam engine. Technically workable - but missing the bigger leap in possibility.

"What if," I countered, "AI isn't just improving our systems? What if it is a new system altogether? What if language becoming action changes the fundamental nature of what software can do?"

The Pattern Recognition Revolution

But here's what the skeptics are missing when they dismiss AI as just expensive automation.

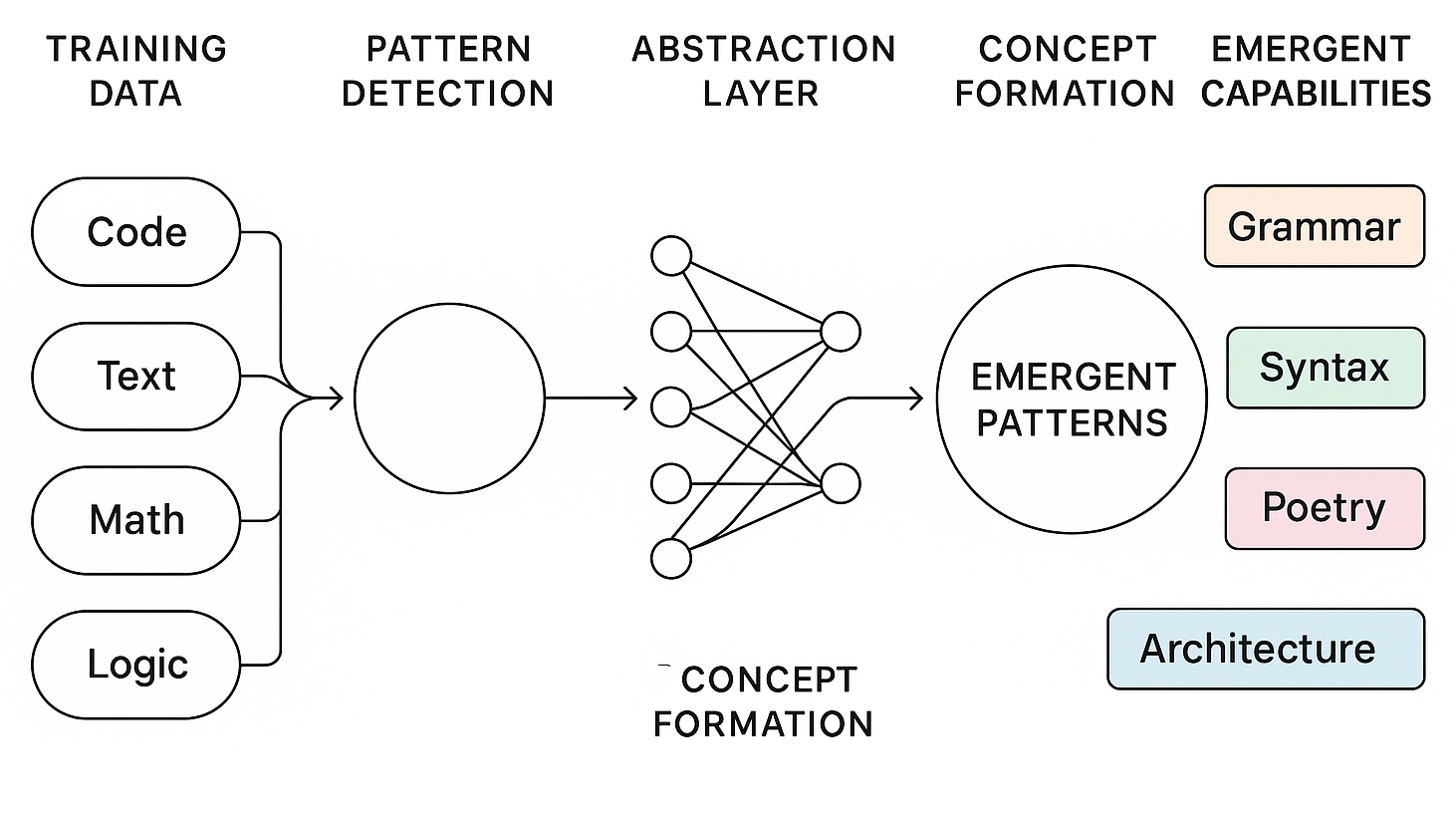

When an unsupervised neuron learns patterns it was never explicitly trained on - when y = w·x + b (…wait, I rush; that’s just the basic math of a neuron. Simple on its own, but millions of these blocks stacked together create what we call “AI”.) - activates on something completely novel - that’s not incremental improvement. That’s emergence.

And it stops me in my tracks every time I think about it.

We fed these models grammar and literature, but never taught them poetry; they learned it from patterns. We gave them code repositories but never taught them system design; yet they can architect solutions by recognizing patterns across millions of examples. We showed them logical reasoning but never explained humor; yet they can craft jokes that land.

That combination of deep learning, massive data, and scaling laws for compute isn’t incremental improvement; it’s a phase change. For centuries, from Euclid to Babbage to today’s modern applications, humans hand‑coded logic. Now LLMs infer it, identify structures, and generate solutions without being told.

The language of machines is changing - this is the beginning of something fundamentally different.

…Yet We Keep Hearing About Disappointments with AI

Just the other day a report claimed 95% of GenAI projects are failing. It’s probably right - but it’s also missing the bigger shift: the ground beneath our feet is moving like nothing this industry has ever seen.

The issue isn’t capability. It’s architecture.

Picture conducting the world’s greatest orchestra. Half your musicians are classically trained virtuosos - flawless with sheet music, precise in every note. The other half are jazz artists - improvisers who create magic in the moment.

The classical side is your deterministic systems: the CRM that never loses a record, the database that tracks every transaction, the security stack that executes rules without deviation. Give them clear instructions, and they play with Swiss precision.

The jazz side is your AI systems: LLMs that write poetry, vision models that infer context, agents that synthesize across domains. They don’t follow sheet music. They respond to mood, nuance, intent - and generate something new every time.

Most enterprises are trying to fix the problem by forcing jazz musicians to read sheet music. The result? Robotic outputs - correct on paper, lifeless in essence.

The magic isn’t in choosing order or chaos. It’s in learning to conduct both, simultaneously.

Here’s one example where the misunderstanding shows up. I’ll often hear leaders say, “we’ll replace our ETL pipelines with AI”. It sounds efficient, but kinda misses the point - ETL requires strict schemas, consistent edge‑case handling, and airtight audit trails. AI can assist, but it can’t yet play that role reliably.

Production systems often demand determinism. A financial reconciliation system can’t be “mostly accurate” or “creative.” It must be exact, every time, with full traceability.

This isn’t about being anti‑AI. It’s about orchestration - using the right tool for the right job. And that requires a new architecture.

Engineering Controlled Chaos

So, the question is then: how do you build systems that are simultaneously creative and reliable?

Traditional software engineering taught us to eliminate variability. But AI thrives on what I call "controlled chaos" - strategic randomness that sparks creativity while staying within useful boundaries.

Before I share my view on how to do it right… you need to understand what entropy is.

In plain English, entropy is just “how much randomness is in the mix.” In LLMs, it shows up in the probability distribution of the model’s next-word predictions (I know, that’s a geeky mouthful). Low entropy means the model is laser-focused - it's confident about what comes next. High entropy means the model is shrugging - lots of words look equally possible.
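For the geeks: here is a minimal sketch of that idea, computing Shannon entropy (in bits) over a next-word distribution. The two distributions are made-up numbers for illustration, not output from any real model.

```python
import math

def shannon_entropy(probs):
    """Entropy in bits of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical next-word distributions over four candidate words.
focused = [0.97, 0.01, 0.01, 0.01]    # laser-focused: one word dominates
shrugging = [0.25, 0.25, 0.25, 0.25]  # shrugging: every word looks equally likely

low = shannon_entropy(focused)
high = shannon_entropy(shrugging)
print(f"focused: {low:.2f} bits, shrugging: {high:.2f} bits")
```

With four equally likely words the entropy is 2 bits, the maximum for four options; the focused distribution sits far below that.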

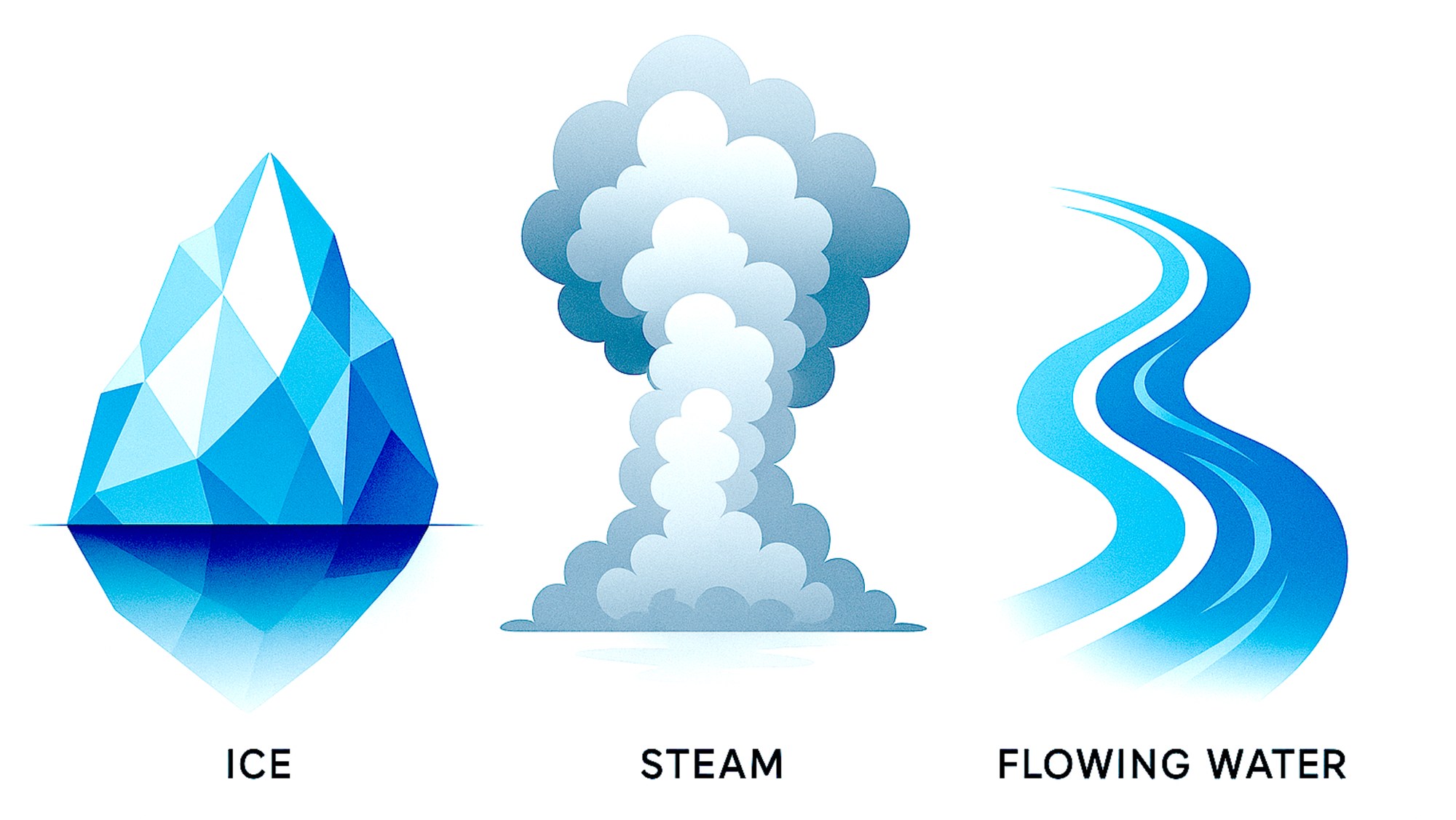

When you’re designing systems that use AI, don’t think only in terms of code and data pipelines. Think in terms of water in its three states.

Ice (temp = 0.0): Crystalline order. Perfect for medical diagnosis, fraud detection, security - anywhere lives or compliance depend on identical outputs every time. The model picks the highest probability word, period.

Flowing Water (temp = 0.3-0.7): The sweet spot for most business use. Customer support (0.3) stays helpful but adds personality. Marketing copy (0.7) needs surprise to engage. The model balances predictability with creativity - controlled chaos channeled toward useful work.

Steam (temp = 0.9+): Maximum entropy for brainstorming and creative exploration. Beautiful for ideation, dangerous for production. The model freely explores low-probability paths, creating unexpected connections.

Most enterprises think the choice is only between ice and steam. The breakthrough is in engineering the flow. This isn’t about surrendering to randomness - it’s about practicing strategic stochasticity.
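To see what the temperature knob does mechanically, here is a small sketch of temperature-scaled softmax. The `logits` values are invented for illustration, and treating temperature 0 as "pick the top word" is a convention of this sketch; real model APIs may handle that edge case differently.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; temperature 0 is treated as greedy."""
    if temperature == 0:
        # "Ice": all probability mass goes to the highest-scoring word.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate words
ice = softmax_with_temperature(logits, 0.0)
water = softmax_with_temperature(logits, 0.5)
steam = softmax_with_temperature(logits, 1.0)
```

As the temperature rises, probability mass leaks away from the top word toward the low-probability paths, which is exactly the ice-to-steam progression.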

So… how do we actually engineer controlled chaos?

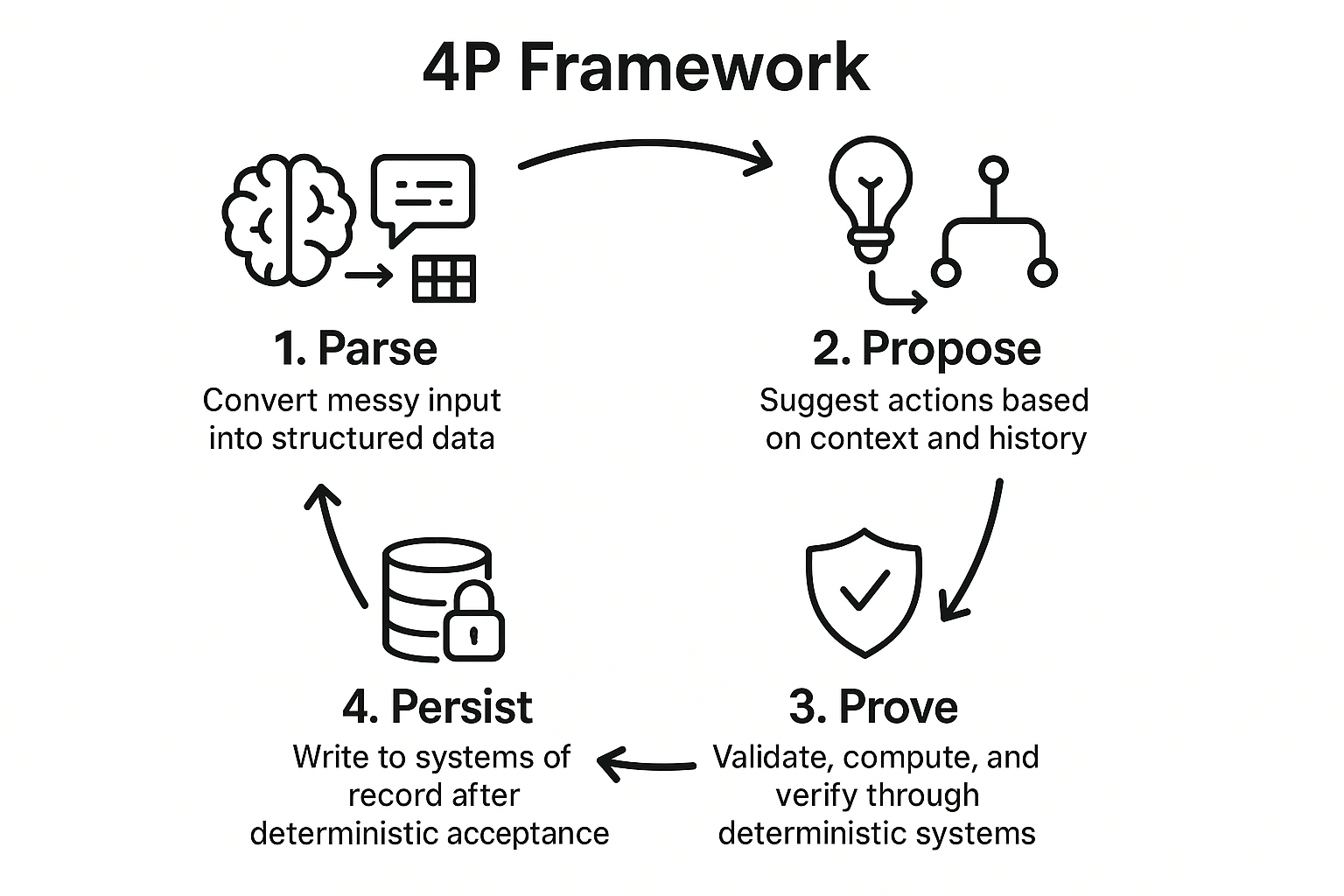

The 4Ps Framework: Solving the Stochastic-Deterministic Divide

Stochastic Front-End + Deterministic Back-End = Actual Value

I've watched too many AI projects sink because teams built on quicksand. They'll debate frameworks for months while missing the point: if you don't control the chaos (Monte Carlo randomness), your system becomes chaos. Every unmanaged probability becomes a production failure waiting to happen.

So I built a playbook: the 4Ps (not Kotler’s, though one is always inspired by him) - four gates that turn stochastic sparks into production‑grade reliability for enterprise AI applications.

Parse: Where Most Teams Trip First

Think of an assistant who’s worked with you for years. When you say “set up something with the pricing team,” they know you mean a formal meeting with an agenda, not an espresso catch-up. That’s parsing: extracting intent with structure.

- Request: “I need something professional but not too formal for my presentation next week.”

- Parsed intent: {purpose: presentation, style: professional-casual, timeline: 7-days, context: business}

Most teams think parsing is just data cleaning. Wrong. This is your first entropy firewall. Handle ambiguity here - otherwise your system will hallucinate requirements that don’t exist.

Propose: The Confidence Trap

Now imagine that same assistant says, “I’m 85% sure this is right,” but then admits it’s just gut feel. That’s LLMs today. Their “confidence” is probability mass, not statistical certainty. It looks precise, but it’s mathematical theater.

When your system suggests three options, can it tell you which one it’s least certain about - and why? If not, you’re flying blind.

Prove: The Deterministic Firewall Everyone Skips

Before any AI output touches production, it must clear deterministic gates: budget checks, permissions, compliance rules.

valid_action = AI_output ∩ Business_rules ∩ Compliance_constraints

If the intersection is empty, the action is blocked. No exceptions. This is customs control for your AI - passports get stamped or travel stops.

Persist: The Audit Trail Nobody Thinks About

Finally, only write to systems of record after deterministic approval. Every state change - financial transaction, customer update, audit log - must carry its full decision trail.

Here’s how a single request can go sideways in the absence of gates - procurement in this case, but it could just as easily be R&D, Finance, HR, or Marketing.

Example: Think of a procurement request:

“Buy 200 laptops for the new design team.”

- Parse → pulls {intent: purchase, item: laptops, quantity: 200, cost_center: design, approver: VP_ops}. Miss one of those (say, approver) and compliance is already broken.

- Propose → suggests Vendor A, Vendor B, and - why not - Vendor Z (which doesn’t even exist). Without metadata like {vendor, confidence}, that hallucination can slip through.

- Prove → checks finance system: budget cap = $250k. Pulls vendor catalog: Vendor B quote = $600k. Without this deterministic gate, the system would happily click “buy.”

- Persist → only after all checks does it log {timestamp, user_id, request_hash, rule_results, approver} into SAP. Skip that, and the audit team has no trail of why the purchase was approved.

One seemingly “simple” request - and without the 4Ps, a slick Agentic AI demo becomes a compliance nightmare in production.

⚙️ Technical appendix: 4Ps Implementation notes for tech teams

👀 Others can skip - this note is for engineering teams who need the implementation specifics.

Parse Stage: Use Pydantic models to enforce contracts. Parse emits {intent: str, entities: dict, confidence: float, entropy_setting: float}. Log both the raw input and parsed structure. If parsing fails, route to human queue with failure reason.
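A minimal sketch of the Parse contract follows. It uses a standard-library dataclass as a stand-in for a Pydantic model so it runs without dependencies; the class name `ParsedRequest`, the helper `parse_or_escalate`, and the validation bounds are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ParsedRequest:
    intent: str
    entities: dict
    confidence: float
    entropy_setting: float

    def __post_init__(self):
        # Assumed bounds; tune these to your own contract.
        if not self.intent:
            raise ValueError("intent must be non-empty")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        if self.entropy_setting < 0.0:
            raise ValueError("entropy_setting must be non-negative")

def parse_or_escalate(raw_input, candidate):
    """Return ("parsed", ParsedRequest) or ("human_queue", failure reason)."""
    try:
        return "parsed", ParsedRequest(**candidate)
    except (TypeError, ValueError) as exc:
        # A real system would also log raw_input next to the failure reason.
        return "human_queue", f"{type(exc).__name__}: {exc}"
```

A missing field surfaces as a `TypeError` and an out-of-range value as a `ValueError`; either way the request goes to the human queue with its reason instead of flowing downstream.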

Propose Stage: Generate 3-5 candidates at different temperature settings. Each candidate gets metadata: {content: str, temperature: float, tokens_used: int, model_confidence: float, reasoning_trace: str}. Cache by intent hash to avoid regenerating for similar requests.
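One way to sketch the Propose stage, under assumptions: `fake_generate` is a hypothetical stand-in for a model call, and only `content` and `temperature` are recorded because the other metadata fields depend on what your model API exposes. The cache here is exact-match on a hash of the parsed intent, so it only catches identical requests; matching merely similar ones would need something more.

```python
import hashlib
import json

_cache = {}

def intent_hash(parsed_intent):
    """Stable hash of the parsed intent, used as the cache key."""
    canonical = json.dumps(parsed_intent, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def fake_generate(parsed_intent, temperature):
    # Hypothetical stand-in for a model call; returns canned text.
    return f"draft for {parsed_intent['intent']} at T={temperature}"

def propose(parsed_intent, temperatures=(0.0, 0.3, 0.7), generate=fake_generate):
    """Generate one candidate per temperature, reusing cached results."""
    key = intent_hash(parsed_intent)
    if key in _cache:
        return _cache[key]
    candidates = [
        {"content": generate(parsed_intent, t), "temperature": t}
        for t in temperatures
    ]
    _cache[key] = candidates
    return candidates
```

Sorting keys before hashing means two intents with the same fields in a different order land on the same cache entry.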

Prove Stage: Build a validation pipeline with circuit breakers. Each rule (budget, compliance, permissions) returns {passed: bool, rule_name: str, evidence: dict}. If any critical rule fails, reject immediately. Track rule performance - if a rule fails more than 20% of the time, alert for review.
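A minimal sketch of such a gate, reusing the figures from the procurement example ($250k budget cap, $600k Vendor B quote, a non-existent Vendor Z). The function names and the shape of `action` and `context` are assumptions, and every rule is treated as critical here.

```python
def vendor_rule(action, context):
    """The proposed vendor must exist in the vendor catalog."""
    passed = action["vendor"] in context["vendor_catalog"]
    return {"passed": passed, "rule_name": "vendor_exists",
            "evidence": {"vendor": action["vendor"]}}

def budget_rule(action, context):
    """The total cost must not exceed the budget cap."""
    passed = action["total_cost"] <= context["budget_cap"]
    return {"passed": passed, "rule_name": "budget",
            "evidence": {"total_cost": action["total_cost"],
                         "budget_cap": context["budget_cap"]}}

def prove(action, context, rules):
    """Run rules in order; reject on the first failure and keep the evidence."""
    results = []
    for rule in rules:
        result = rule(action, context)
        results.append(result)
        if not result["passed"]:
            return False, results
    return True, results

context = {"budget_cap": 250_000, "vendor_catalog": {"Vendor A", "Vendor B"}}
approved, trail = prove({"vendor": "Vendor B", "total_cost": 600_000},
                        context, [vendor_rule, budget_rule])
```

The rules are plain functions with no model in the loop, so the same action and context always give the same verdict, and `trail` records why.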

Persist Stage: Write to append-only audit store first, then business systems. Each record includes SHA-256 hash of previous record for tamper detection. Structure: {timestamp, user_id, input_hash, all_candidates, validation_results, final_action, approver}.
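The hash-chaining idea can be sketched in a few lines. This is an in-memory illustration, assuming a Python list as the "append-only store" and JSON-serializable payloads; a production store would need durable, write-once storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def _digest(prev_hash, payload):
    body = json.dumps({"prev_hash": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append_record(log, payload):
    """Append a record whose hash covers the previous record's hash."""
    prev_hash = log[-1]["record_hash"] if log else GENESIS
    record_hash = _digest(prev_hash, payload)
    log.append({"prev_hash": prev_hash, "payload": payload, "record_hash": record_hash})
    return record_hash

def verify_chain(log):
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["record_hash"] != _digest(prev_hash, record["payload"]):
            return False
        prev_hash = record["record_hash"]
    return True
```

Because each hash covers the one before it, changing an old record invalidates every record after it, which is what makes tampering detectable.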

Entropy Monitoring: Track temperature settings, output variance (token-level entropy), and confidence distributions per intent class. Alert when variance shifts >2 standard deviations from baseline. Use time-series DB for pattern detection across temperature/variance relationships.
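The two-standard-deviation alert reduces to a z-score check. A rough sketch, assuming you already collect a per-intent baseline of variance readings; the function name and sample numbers are illustrative.

```python
import statistics

def variance_alert(baseline, latest, threshold=2.0):
    """True when `latest` sits more than `threshold` std devs from the baseline mean."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)  # needs at least two baseline points
    if stdev == 0:
        return latest != mean
    return abs(latest - mean) / stdev > threshold

# Hypothetical baseline of output-variance readings for one intent class.
baseline = [1.0, 1.1, 0.9, 1.05, 0.95]
drifted = variance_alert(baseline, 1.3)
normal = variance_alert(baseline, 1.05)
```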

Fallback Implementation: For each stochastic component, maintain deterministic alternatives: regex patterns, rule-based classifiers, or retrieval from curated response templates. Implement timeout thresholds (3-5 seconds per stage). If LLM times out or quality scores drop below threshold, switch to fallback automatically.
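Here is one way the timeout-then-fallback switch might look, using only the standard library. `slow_model` is a hypothetical stand-in for an LLM call and `regex_intent` is a deliberately crude rule-based alternative; quality-score checks are left out of this sketch.

```python
import re
import time
from concurrent.futures import ThreadPoolExecutor

def regex_intent(request):
    """Deterministic fallback: a crude keyword classifier."""
    if re.search(r"\b(buy|purchase|order)\b", request, re.IGNORECASE):
        return "purchase"
    return "unknown"

def slow_model(request):
    # Hypothetical stand-in for an LLM call that takes too long.
    time.sleep(0.2)
    return "purchase"

def with_fallback(primary, fallback, request, timeout_s=3.0):
    """Run primary(request); on timeout or error, use fallback(request)."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(primary, request)
    try:
        return future.result(timeout=timeout_s), "primary"
    except Exception:
        # Covers both the timeout and any error raised inside the primary call.
        return fallback(request), "fallback"
    finally:
        pool.shutdown(wait=False)  # do not block on a slow primary call

result = with_fallback(slow_model, regex_intent, "Buy 200 laptops", timeout_s=0.05)
```

Returning which path produced the answer lets the audit trail record whether the fallback fired.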

The magic happens at that boundary - where human intent meets machine precision. Most enterprises fail because they try to make AI deterministic or accept pure chaos. This framework lets you have both.

Why "Agentic AI" Misses the Point

"But why focus on LLM internals? They’re commodity now. The future is agentic AI."

That perspective sounds compelling, but it skips the foundation. Agents don’t rise above entropy - they run on top of it. Ignore that, and even the most sophisticated “agentic” stack underdelivers.

Here’s the reality: whether it’s LangChain, LangGraph, CrewAI, MCPs, RAG - pick your buzzword bingo card - it all collapses to the same thing: context flattened into tokens running through the same attention layers.

This is where I see teams stumble. They treat these frameworks as a silver bullet. It’s become fashionable to sprinkle “agentic” or “MCPs” into every conversation. But unless the foundational design manages entropy, the buzzwords don’t buy you reliability. All the orchestration in the world can’t fix that.

Consider two types of chess players:

The One-Speed Player: Always plays with the same level of risk and creativity, whether they're in a safe opening position or a critical endgame. They might use aggressive, creative tactics when they need precision, or play conservatively when they need to take calculated risks.

The Context-Aware Player: Adjusts their approach based on the board state. They play precisely and safely when ahead (low entropy), take calculated creative risks when behind (medium entropy), and only go for wild, unpredictable moves in desperate situations (high entropy).

Most “agentic” AI today? One‑speed players. Same entropy knob turned to the same setting, no matter the situation. Without an entropy architecture that flexes with context, the agent doesn’t know when to be reliable versus when to be inventive. It isn’t amplifying intelligence - it’s just guessing with style.
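The simplest possible version of a context-aware player is a policy table that picks the entropy setting per task instead of hard-coding one. A sketch, assuming the task labels below; the temperature values mirror the ice / flowing water / steam bands described earlier.

```python
# Hypothetical task labels mapped to the temperature bands described earlier.
TEMPERATURE_POLICY = {
    "fraud_detection": 0.0,   # ice
    "customer_support": 0.3,  # flowing water
    "marketing_copy": 0.7,    # flowing water
    "brainstorming": 0.9,     # steam
}

def pick_temperature(task_type, default=0.0):
    """Unknown task types fall back to the most conservative setting."""
    return TEMPERATURE_POLICY.get(task_type, default)
```

Defaulting to 0.0 is a design choice: when the agent does not know what situation it is in, it plays the safe opening rather than the wild gambit.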

Why This Changes Everything

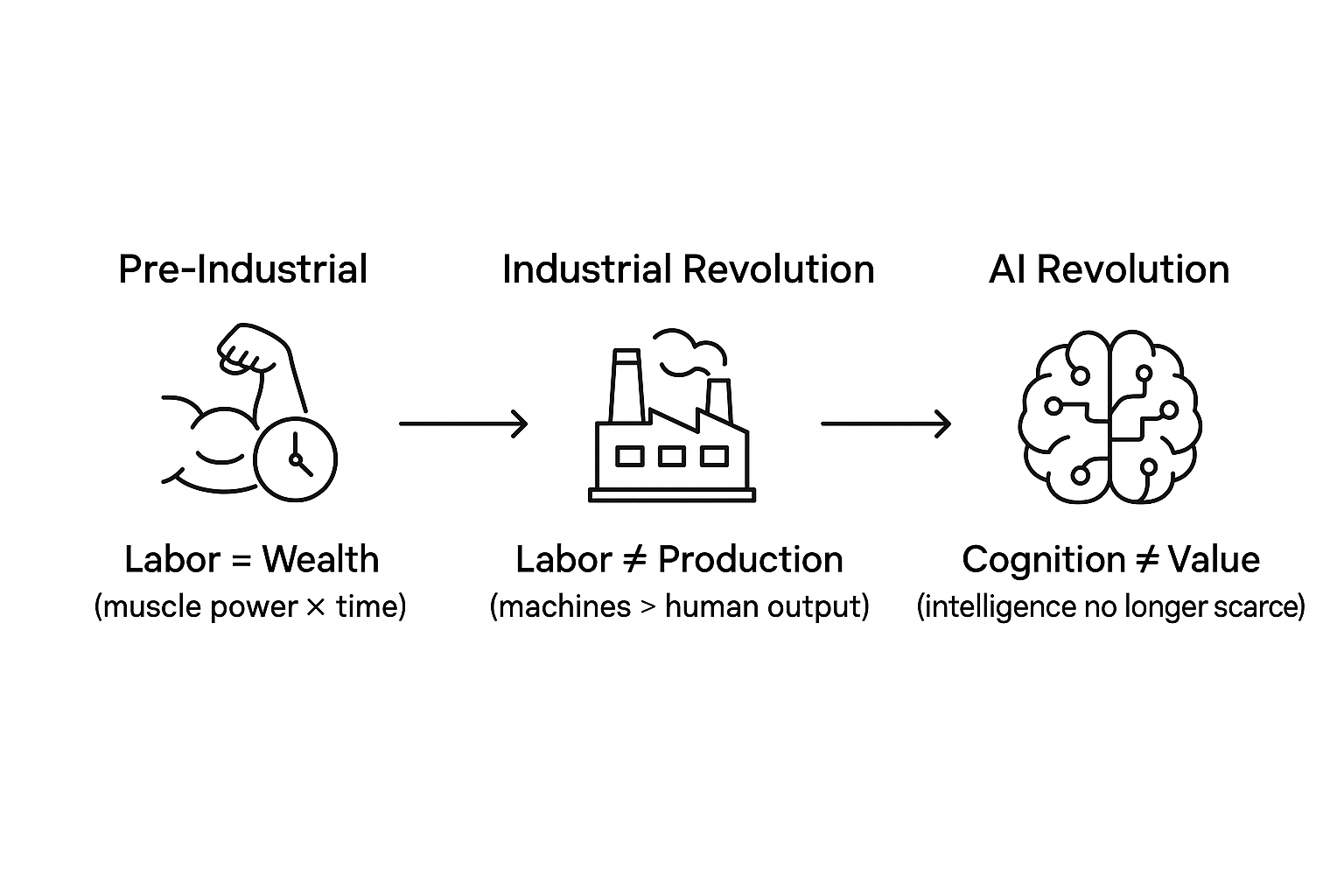

For leaders and boards making decisions about transformative AI investments - this moment reminds me of something Joseph Schumpeter wrote about "creative destruction." Exponential technologies don't just improve existing processes; they obliterate old categories entirely and birth new ones.

History shows us exactly three moments when humanity fundamentally rewrote value creation:

Each time, the winners weren't those who used new technology to optimize old systems. They recognized that new technology enabled entirely different systems to exist.

Henry Ford didn't build a faster horse. He built a different relationship between humans and transportation.

AI isn't building a smarter CRM. It's building a different relationship between humans and information. When language becomes the universal API, when intention directly translates to action, we're enabling entirely new forms of human-machine collaboration.

Strategic Imperatives for Leadership

Traditional advantages such as proprietary data, network effects, and switching costs still matter. What AI introduces is a new kind of defense: cognitive moats. Firms that master entropy engineering are not just building tools, they are crafting systems that learn with every cycle, growing smarter and more adaptive in ways rivals cannot copy.

The real edge will not come from bolting on another model or buying a shinier toolkit. It will come from rewiring the value chain around intelligence amplification.

And the clock is unforgiving. Innovation windows that once stretched across five years now collapse into quarters. The most valuable employees are no longer those who work the old playbooks but those who design AI‑native workflows and translate between human intention and machine execution. Governance built inside the enterprise today will become the advantage when outside regulations finally catch up.

All of this points to a single reality: competitive dynamics are resetting everywhere. The question is not whether AI will transform your industry, but whether you will be the one leading the disruption or the one being redefined by it.

Which brings us back to where we began. Civilization is once again choosing its first words with an invention that will reshape economies and identities. Leaders who recognize this as architectural, not incremental, won't just adopt the vocabulary of the next age - they'll write it.

Have you wrestled with getting AI systems to work reliably in production environments? Or seen the stochastic-deterministic challenge play out in your organization's AI initiatives? I'd love to hear about your experiences with enterprise AI implementations - drop me a line at [muktesh.codes@gmail.com]